The direction in which clock hands move seems logical and obvious: we have seen clock faces since early childhood and never thought to ask why the hands turn the way they do, or how clocks work at all. It might seem that the direction was chosen for technical reasons, or even arbitrarily, with no deeper meaning behind it. In fact, there is a reason, but it lies not in the construction of the clock but in the history of human practice. In this article, we look at it in more detail.

To start with, let us define what a clock is: an extremely common and practical device that has been used to tell time for many centuries. Clocks come in many designs: electronic, quartz, pendulum, and mechanical. Mechanical clocks remain fairly popular today.

Measuring Time: A Closer Look

To measure the passage of time, a clock needs a special device called a regulator. The gear trains that track seconds, minutes, and hours are made of interlocking toothed wheels connected to the clock's hands. The second serves as the basic unit of measurement, and a fixed number of seconds makes up a full day. The day itself is defined by the Earth's rotation on its axis, which proceeds at a nearly constant rate.

Regulators are designed to divide time into equal intervals of one second or a fraction of a second, such as a quarter or a fifth. If the regulator's intervals become shorter than intended, the hands move faster and the clock gains time; if they become longer, the clock falls behind. It is also worth noting that the defining feature of mechanical clocks is their continuous movement.
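A quick calculation shows how a small regulator error turns into a daily gain or loss. This is a minimal sketch, assuming a hypothetical regulator with a nominal beat of one fifth of a second (0.2 s); `daily_error` is an illustrative name, and the sketch ignores every other source of error in a real clock.

```python
SECONDS_PER_DAY = 86_400

def daily_error(nominal_beat: float, actual_beat: float) -> float:
    """Seconds gained (+) or lost (-) per real day.

    The gear train assumes each beat lasts `nominal_beat` seconds,
    but in reality each beat lasts `actual_beat` seconds.
    """
    beats_per_day = SECONDS_PER_DAY / actual_beat   # beats that really occur in one day
    indicated = beats_per_day * nominal_beat        # time the hands actually show
    return indicated - SECONDS_PER_DAY

# A beat 0.1% shorter than intended: the clock runs fast.
print(round(daily_error(0.2, 0.1998), 1))   # 86.5
# A beat 0.1% longer than intended: the clock falls behind.
print(round(daily_error(0.2, 0.2002), 1))   # -86.3
```

Even a 0.1% error in the beat adds up to well over a minute per day, which is why the regulator is the heart of any clock.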

The origins of the first timepieces and how they function

But let us return to the question posed at the start: why do clock hands move in the direction we now call clockwise, from left to right across the top of the dial? The story begins with the ancient Egyptians, who are credited with creating the first timekeeping devices. These devices were not mechanical: they relied on the movement of the sun, and sunny weather inspired their invention.

Throughout history, people have been fascinated by the stars and other celestial bodies. The Egyptians, like people today, were particularly intrigued by the sun and its path across the sky. Through careful observation, they noticed that as the sun moved, the shadows of people and objects shifted accordingly. This led to the idea of the sundial: all it took was a vertical stone pillar and a set of markings on the ground to indicate the time.

Over time, people wanted more accurate measurements. This was especially important because the sun's path across the sky shifts with the seasons, so the hour markings had to be adjusted again and again.

Origin of mechanical timepieces

The first mechanical clocks appeared in Europe more than 700 years ago. These new devices quickly became popular and were installed on the towers of major European cities and towns. Their mechanisms were still crude and imprecise, so the clocks had only a single hand, which moved in what we now call the clockwise direction.

But why this particular direction? The answer is that Europe's mechanical clocks were the direct heirs of the sundial, which tells time by the shadow the sun casts on the ground. In the Northern Hemisphere, that shadow moves around the dial from left to right as the sun crosses the sky, so the same motion was carried over to mechanical clocks and, from them, to every later timepiece.

There was also the potential for a different result

It is no accident that clocks invented in Europe turn the way they do. In the Southern Hemisphere, the shadow on a sundial moves in the opposite direction. Had mechanical clocks been invented and popularized there, modern clocks would most likely run the other way. If Australia, for example, had been the center of clockmaking, we would probably have switched to a right-to-left (counterclockwise) dial long ago.

Interestingly, there have been attempts to build clocks whose hands move the other way. They caught on only in rare cases, most famously on the Jewish Town Hall in Prague, where the Hebrew-numeral clock runs counterclockwise, matching the right-to-left direction in which Hebrew is read. Some people believe that “anticlockwise” dials (running opposite to conventional hands) would suit left-handed people, who do most tasks with their left hand. You can even find such models in stores, but they are unlikely to become widespread, since most people have already switched to electronic displays.

What exactly is time? The mind keeps returning to this question, yet a precise definition proves hard to formulate. Philosophy, physics, and metrology each offer their own concepts and interpretations of time.

Classical mechanics and the theory of relativity use fundamentally different conceptions of time. In classical mechanics, time describes the sequence of events occurring in three-dimensional space. In relativity, time is treated as a fourth coordinate alongside the three spatial ones.

But let's start from the beginning: the history of time measurement and how the second became the base unit of time. We will then look at how physics treats time and discuss relativistic and gravitational time dilation.

The Definition of Time

Time is an inherent natural phenomenon characterized by its constant flow. As time progresses, the world undergoes continuous transformations and various events unfold. Therefore, in the realm of physics, it is essential to understand time within the context of events.

Without any events taking place, time loses its conventional significance and essentially ceases to exist. In other words:

Time is a measure of the changes that take place around us. It describes how long objects exist, how their states change, and how the processes within them unfold.

In the International System of Units (SI), time is measured in seconds (s) and is usually denoted by the letter t.

How Was Time Measured in the Past?

Measuring time requires a recurring event of constant duration, such as the alternation of day and night. Every day the sun rises in the east and sets in the west, and over a synodic month the Moon passes through its full cycle of phases, from a thin crescent to full moon and back.

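A synodic month is the time between two consecutive new moons, about 29.5 days. It is slightly longer than one orbit of the Moon around the Earth (about 27.3 days relative to the stars), because the Earth moves along its own orbit in the meantime.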
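The difference between the two periods can be checked with a short calculation. While the Moon completes one orbit relative to the stars (a sidereal month of about 27.32 days), the Earth moves along its orbit around the Sun, so the Moon must travel a little further to line up with the Sun again. A minimal sketch, using rounded values for both periods and the relation 1/T_syn = 1/T_sid - 1/T_year:

```python
SIDEREAL_MONTH_DAYS = 27.32   # one lunar orbit relative to the stars (rounded)
YEAR_DAYS = 365.25            # one orbit of the Earth around the Sun (rounded)

def synodic_month(sidereal: float, year: float) -> float:
    """Length of the lunar phase cycle, in days, from the two orbital periods."""
    # The Moon's angular rate relative to the Sun is its rate relative
    # to the stars minus the Earth's orbital rate around the Sun.
    return 1.0 / (1.0 / sidereal - 1.0 / year)

print(round(synodic_month(SIDEREAL_MONTH_DAYS, YEAR_DAYS), 2))  # 29.53
```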

Ancient civilizations relied on celestial bodies and associated phenomena, such as the changing of days, nights, and seasonal cycles, to keep track of time.

As time went on, the measurement of time advanced, leading to many types of clocks: solar, water, sand, fire, mechanical, electronic, and eventually atomic clocks.

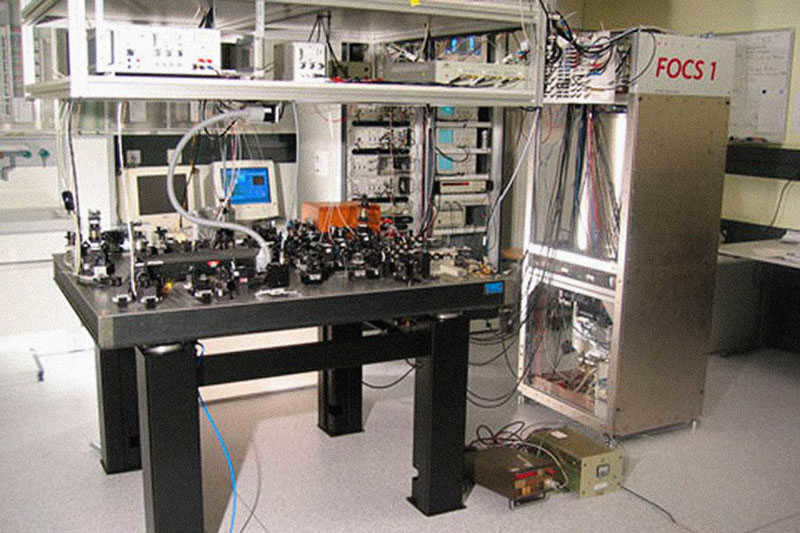

The FOCS-1 atomic clock in Switzerland is extraordinarily precise: it would drift by only about one second over 30 million years. Even so, after those 30 million years it would need to be corrected.

What is the reason behind having 60 minutes in an hour, 60 seconds in a minute, and 24 hours in a day?

Let us say right away that what follows is largely the author's own interpretation of the historical evidence. If readers have corrections or questions, we will be happy to discuss them in the comments.

Ancient civilizations needed a foundation for constructing their numeral systems. In Babylon, the number 60 served as such a foundation.

Under the influence of the Sumerians, who invented the sexagesimal (base-60) numeral system later adopted by Ancient Babylon, the circle was divided into 360 degrees, each degree into 60 minutes, and each minute into 60 seconds.

A circle can represent a year, with each degree standing for one day. It is believed that 360 was chosen because a year has about 365 days, rounded down to 360, a number that fits neatly into the base-60 system (6 × 60 = 360).

In ancient times, the hour was the shortest unit of time. The Babylonians, known for their mathematical skill, introduced smaller units based on their favorite number, 60. As a result, an hour has 60 minutes and a minute has 60 seconds.
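Splitting time into hours, minutes, and seconds is the same as writing a number in base 60. The sketch below (function names are illustrative) converts a number of seconds by repeated division by 60, and converts a decimal fraction of an hour into minutes and seconds.

```python
def seconds_to_hms(total_seconds: int) -> tuple[int, int, int]:
    """Split a whole number of seconds into (hours, minutes, seconds)."""
    minutes, seconds = divmod(total_seconds, 60)   # 60 seconds in a minute
    hours, minutes = divmod(minutes, 60)           # 60 minutes in an hour
    return hours, minutes, seconds

def decimal_hours_to_hms(hours: float) -> tuple[int, int, int]:
    """Convert a decimal number of hours, e.g. 1.75, into (1, 45, 0)."""
    return seconds_to_hms(round(hours * 3600))

print(seconds_to_hms(3725))          # (1, 2, 5)
print(decimal_hours_to_hms(1.75))    # (1, 45, 0)
```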

The largest unit of time

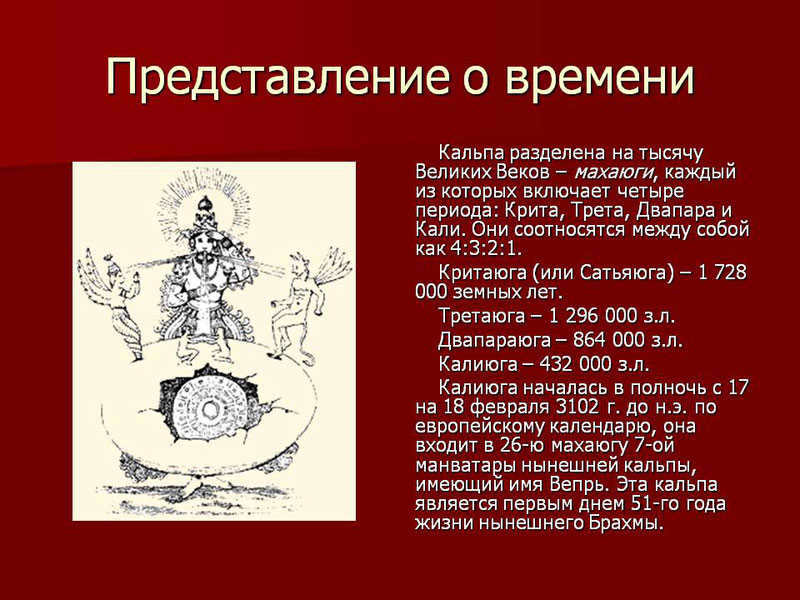

The largest unit of time, the kalpa, comes from Hinduism and Buddhism. It corresponds to roughly 4.32 billion years, within about 5% of the age of the Earth (about 4.54 billion years).

The origins of these immense numbers remain a mystery. However, to some, the system as a whole suggests that ancient Hindus had a deeper understanding of the cosmos than we have today.

In Hinduism, a kalpa is often called “a day of Brahma.” This day is followed by a night of equal length. A month of Brahma consists of 30 such days and nights, and a year consists of 12 months. Brahma is said to live for 100 years, after which the world ceases to exist.

Converted into ordinary years, Brahma's hundred years come to a staggering 311.04 trillion years (311 trillion and 40 billion)! Brahma is said to be in the 51st year of his life.
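The figure follows directly from the proportions described above. A short check, assuming exactly those proportions (a night as long as the day, 30 days per month, 12 months per year, 100 years of life):

```python
KALPA_YEARS = 4_320_000_000            # one day of Brahma, in ordinary years

day_and_night = 2 * KALPA_YEARS        # the night is as long as the day
brahma_year = 12 * 30 * day_and_night  # 12 months of 30 days and nights each
brahma_lifespan = 100 * brahma_year    # Brahma lives for 100 such years

print(f"{brahma_lifespan:,}")          # 311,040,000,000,000
```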

Therefore, if all of this holds true, there is no need to be concerned – the universe will continue to exist for an extensive period of time.

According to the Guinness Book of World Records, the kalpa is recognized as the largest unit of time measurement.

The original timepiece

In the beginning, a simple stick sufficed as a means of marking the passage of time. By carving notches into the stick with a stone axe, people could keep track of the days. However, this was more of a calendar than a true clock.

The first true clocks were sundials, which date back to antiquity. They relied on the movement of the sun and the changing length of the shadows cast by objects. The indicator was a gnomon, a long pole set into the ground. Sundials were widely used in ancient Egypt and China, with evidence of their use as early as 1200 BC.

Next came water clocks, sand clocks, and fire clocks. Because these devices did not depend on the sun or the stars, they also worked at night and in cloudy weather, and for a long time they served as the main means of measuring time.

Chinese craftsmen built the first mechanical clock in 725 AD. However, mechanical clocks did not come into wide use until much later.

In medieval Europe, mechanical clocks were installed in cathedral towers and had a single hand that showed the hour. Accurate pocket watches appeared only after 1675, when Christiaan Huygens introduced (and patented) the balance spring, and wristwatches came later still.

The first wristwatches were designed for women and were lavishly decorated, but they were often quite inaccurate. Men of the time would not wear such a watch, considering it unsuitable for their image.

Contemporary timepieces

Today, both men and women wear mechanical or electronic watches, which are far more accurate. Still, the most precise timekeeping devices are atomic clocks, sometimes also called quantum clocks.

As we all know, a certain type of recurring process is necessary in order to establish a unit of time. At one point in history, the shortest unit was the day, meaning that the unit of time was determined by the regularity of sunrises and sunsets. Eventually, the smallest unit became the hour, and so on.

Since 1967, the International System of Units (SI) has defined the second as the duration of 9,192,631,770 periods of the radiation emitted during the transition between the two hyperfine levels of the ground state of the cesium-133 atom.
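This definition turns measuring time into counting: every 9,192,631,770 periods of the cesium radiation make one SI second. A minimal sketch of that conversion follows; the function name is illustrative, and a real atomic clock locks an electronic oscillator to the atomic resonance rather than counting periods in software.

```python
from fractions import Fraction

CS133_HZ = 9_192_631_770  # periods of the cesium-133 hyperfine radiation per SI second

def cycles_to_seconds(cycles: int) -> Fraction:
    """Elapsed time, in SI seconds, represented by a count of cesium periods."""
    return Fraction(cycles, CS133_HZ)   # exact rational result, no rounding

period = 1 / CS133_HZ                        # duration of one period, in seconds
print(f"{period:.4e} s")                     # 1.0878e-10 s
print(cycles_to_seconds(CS133_HZ))           # 1
print(cycles_to_seconds(3 * CS133_HZ // 2))  # 3/2
```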

Concept of Time in Physics

Currently, there is no definitive and unified understanding of the concept of time in physics.

In classical mechanics, time is regarded as a continuous, fundamental property of the world that is taken as given rather than defined through anything else.

Time is measured by some recurring sequence of events. In classical physics, time is absolute: it flows identically in every reference frame, so events that are simultaneous in one frame are simultaneous in all of them.

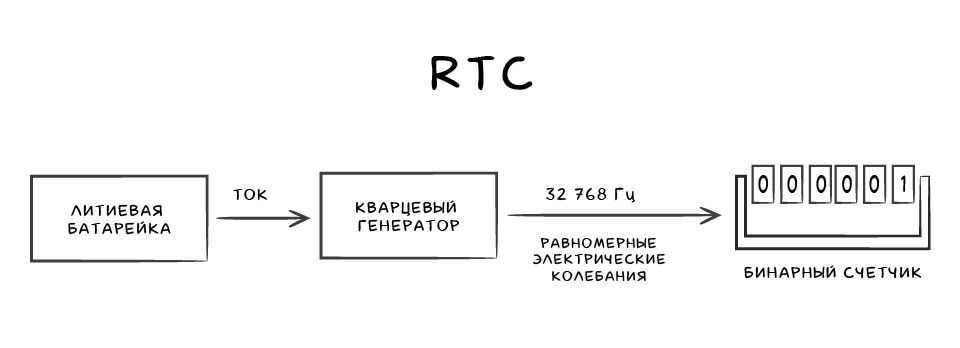

So how is time determined in physics? Every student knows the basic formula that relates distance, speed, and time:

t = S / v

This is the formula for the time of uniform rectilinear motion, where t is time, S is the distance traveled, and v is the velocity.
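As a tiny worked example of t = S / v for uniform rectilinear motion (the function name is illustrative; distance and speed must use matching units):

```python
def travel_time(distance: float, speed: float) -> float:
    """t = S / v for uniform rectilinear motion."""
    if speed <= 0:
        raise ValueError("speed must be positive")
    return distance / speed

print(travel_time(150, 60))  # 150 km at 60 km/h: 2.5 hours
```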

According to thermodynamics, time is irreversible because the entropy of an isolated system never decreases.

However, the most intriguing discoveries await us in the realm of relativistic physics. Here is a quote from Stephen Hawking, a renowned physicist who authored “A Brief History of Time.”

It is important to acknowledge that time and space are not completely separate entities, but rather are interconnected and together form a unified concept known as spacetime.

Additionally, in the field of relativistic physics, time is not considered an invariant quantity and can be influenced by the relative motion of reference frames. In other words, the passage of time is dependent on the movement of the observer.

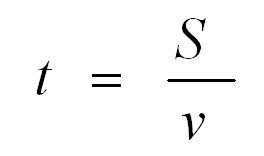

This phenomenon is referred to as relativistic time dilation. When an object is observed from a stationary reference frame, all processes within a moving object appear to occur at a slower rate compared to those in a stationary object. This is the reason why an astronaut traveling at high speeds in space will experience less aging compared to their twin sibling who remains on Earth.
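The size of the effect is given by the Lorentz factor, γ = 1/√(1 - v²/c²): as seen from the stationary frame, a clock moving at speed v runs slow by this factor. A minimal sketch, assuming constant speed and ignoring the acceleration and turnaround of a real round trip (function names are illustrative):

```python
import math

C = 299_792_458.0  # speed of light in vacuum, m/s

def lorentz_factor(v: float) -> float:
    """gamma = 1 / sqrt(1 - v^2 / c^2), defined for 0 <= v < c."""
    if not 0 <= v < C:
        raise ValueError("speed must satisfy 0 <= v < c")
    return 1.0 / math.sqrt(1.0 - (v / C) ** 2)

def traveller_age(earth_years: float, v: float) -> float:
    """Years that pass for the moving twin while `earth_years` pass on Earth."""
    return earth_years / lorentz_factor(v)

print(round(lorentz_factor(0.8 * C), 4))        # 1.6667
print(round(traveller_age(10.0, 0.8 * C), 4))   # 6.0
```

At 80% of the speed of light, ten years on Earth correspond to only six years for the travelling twin.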

Besides relativistic time dilation, there is another kind: gravitational time dilation. What is it? It is a difference in the rate at which clocks run at different points of a gravitational field: the closer a clock is to a massive body (the deeper it sits in the gravitational potential), the more slowly it runs compared with a distant clock.

Recall that a second is defined as the duration of 9,192,631,770 periods of the radiation from a particular quantum transition in the cesium-133 atom. Each clock counts those periods the same way locally, but clocks in different places (on Earth, in deep space far from any celestial body, or close to a black hole) tick at different rates when compared with one another.

As a result, the passage of time depends on the reference frame. Seen from Earth, a clock approaching the event horizon of a Schwarzschild black hole would appear to tick ever more slowly and, at the horizon itself, to stop altogether. Conversely, an observer hovering just outside the horizon would see events in the distant universe unfold at an enormously accelerated rate.
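For a non-rotating spherical mass, the Schwarzschild solution gives the rate of a stationary clock at radius r, relative to a clock far away, as √(1 - r_s/r), where r_s = 2GM/c² is the Schwarzschild radius. The sketch below applies this to the Earth, treated as a non-rotating sphere (an assumption that ignores the effects of its rotation), and to the horizon itself; constants are rounded and function names are illustrative.

```python
import math

G = 6.674e-11        # gravitational constant, m^3 kg^-1 s^-2
C = 299_792_458.0    # speed of light, m/s

def schwarzschild_radius(mass_kg: float) -> float:
    return 2 * G * mass_kg / C**2

def clock_rate(r: float, mass_kg: float) -> float:
    """Rate of a clock at rest at radius r relative to a distant clock.

    1.0 means no slowing; 0.0 means the clock appears frozen (at the horizon).
    """
    rs = schwarzschild_radius(mass_kg)
    if r < rs:
        raise ValueError("r must be at or outside the event horizon")
    return math.sqrt(1 - rs / r)

EARTH_MASS = 5.972e24    # kg
EARTH_RADIUS = 6.371e6   # m, mean radius

lag_per_day = (1 - clock_rate(EARTH_RADIUS, EARTH_MASS)) * 86_400
print(f"{lag_per_day * 1e6:.0f} microseconds per day")            # 60
print(clock_rate(schwarzschild_radius(EARTH_MASS), EARTH_MASS))   # 0.0
```

A clock on the Earth's surface thus loses roughly 60 microseconds per day relative to a clock far out in space, a tiny effect compared with the complete standstill at an event horizon.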


Ivan Kolobkov, also known by the nickname Joni, is a marketer, analyst, and copywriter at Zaochnik. He is a talented aspiring writer with a passion for physics, unique objects, and the literary works of Charles Bukowski.

Imagine this scenario: You stumble upon an ancient computer tucked away in a storage room and decide to give it a whirl. Surprisingly, the computer boots up, even though it has been dormant for a decade. However, there’s a catch: the system insists that today is January 1, 1970. This peculiar situation sheds light on how computers maintain accurate timekeeping. Unlike traditional clocks with springs or pendulums, computers lack these mechanisms to count down seconds. So, how do they manage to keep time, even after being powered off for several hours? And why does this principle fail when a computer remains untouched in a closet for ten long years?

Before we address these inquiries, let’s grasp the concept of measuring time accurately. It’s not so straightforward if you don’t have a timepiece on hand. One option is to tally up the days, months, and years, or roughly break down the day into hours. However, determining a reference point that indicates the exact number of elapsed seconds is considerably more challenging.

It is widely believed that Galileo Galilei, an Italian scientist, was the first person to solve the time measurement problem in the 17th century. Galileo was in need of an accurate way to measure the duration of an experiment. According to his biographers, he initially tried counting his own heartbeats, but one day while observing a swinging candelabrum in the cathedral of Pisa, he noticed that each oscillation of the candelabrum coincided with the same number of heartbeats. This number remained constant even as the amplitude of the pendulum’s movements diminished. It was in this moment that Galileo recognized the potential of pendulum oscillations in providing precise time measurements.

Can oscillations be used to keep time in a computer?

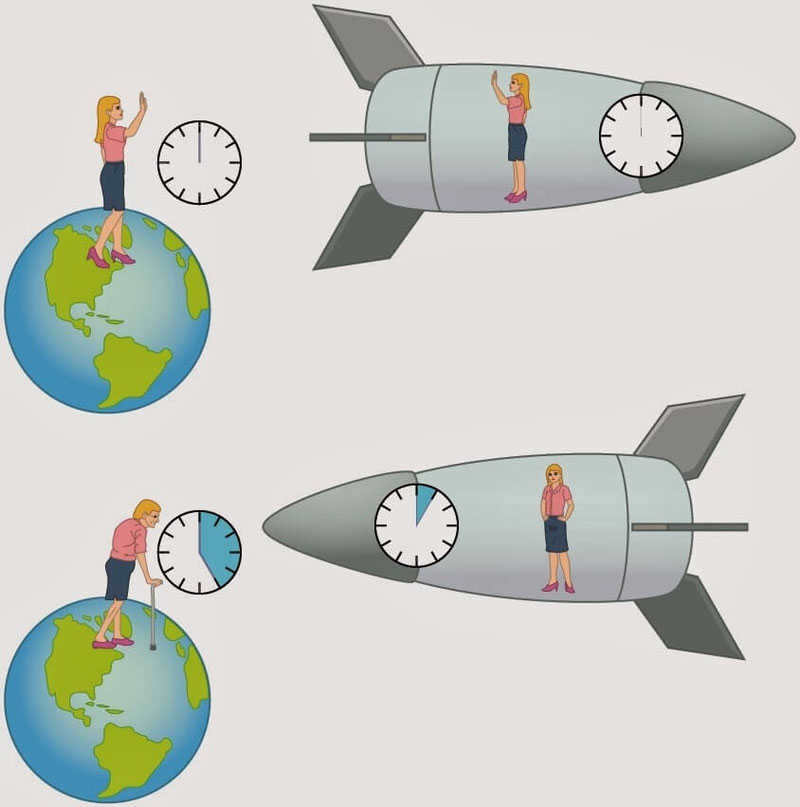

In the 1970s and 1980s, engineers faced the challenge of displaying human time on computers. Surprisingly, they returned to the Italian scientist's idea, but approached it from a different angle. Instead of a pendulum, they needed an electrical source of regular oscillations. The central processing unit (CPU) already runs in step with a uniform clock signal, whose frequency, measured in hertz (Hz), determines how many cycles, and roughly how many operations, the processor performs per second.

However, if the processor had to increment a counter on every cycle, it would have no capacity left for other tasks. The solution is a separate quartz crystal oscillator. Quartz is piezoelectric: a thin quartz plate deforms slightly when a voltage is applied and, as it flexes back, produces an electric charge on its surface. In a suitable circuit, the plate vibrates at a very stable frequency, and its mechanical oscillations are accompanied by equally regular oscillations of the electric charge.

A circuit that turns these regular oscillations into periodic signals for the processor is called a timer; it lets the processor synchronize its operations in time. In this sense, the computer has its own notion of time.

Transitioning from computer time to human time

So the computer contains a timer, but on its own the timer knows nothing about human time. That job is usually handled by the operating system (OS). Because the OS knows how often the timer fires, it can work out the interval between timer interrupts (each interrupt is called a tick, or jiffy). If the timer fires 100 times per second (100 Hz), the interval between ticks is 1/100 of a second, or 10 milliseconds. The operating system keeps a variable in memory, usually called jiffies, and increments it by one each time the processor signals a new tick.

Therefore, in order to determine the duration of the computer’s operation, the system simply needs to multiply the duration between each tick by the total number of ticks. And to determine the current time, you just need to add the elapsed time to the time when the system was first started. But how can you determine the human time at the moment the system was initiated?
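Both steps can be sketched in a few lines. This assumes a 100 Hz timer and a boot time that is already known (for example, read from the RTC discussed next); the function names are illustrative and do not reproduce any real kernel's code.

```python
from datetime import datetime, timedelta, timezone

TICK_HZ = 100  # assumed timer rate: 100 ticks per second, 10 ms per tick

def uptime(jiffies: int, hz: int = TICK_HZ) -> timedelta:
    """Time since boot = number of ticks x length of one tick."""
    return timedelta(seconds=jiffies / hz)

def current_time(boot_time: datetime, jiffies: int, hz: int = TICK_HZ) -> datetime:
    """Human time now = human time at boot + time elapsed since boot."""
    return boot_time + uptime(jiffies, hz)

boot = datetime(2024, 1, 1, 8, 0, 0, tzinfo=timezone.utc)  # hypothetical boot time
ticks = 360_000                                            # one hour of 10 ms ticks
print(uptime(ticks))               # 1:00:00
print(current_time(boot, ticks))   # 2024-01-01 09:00:00+00:00
```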

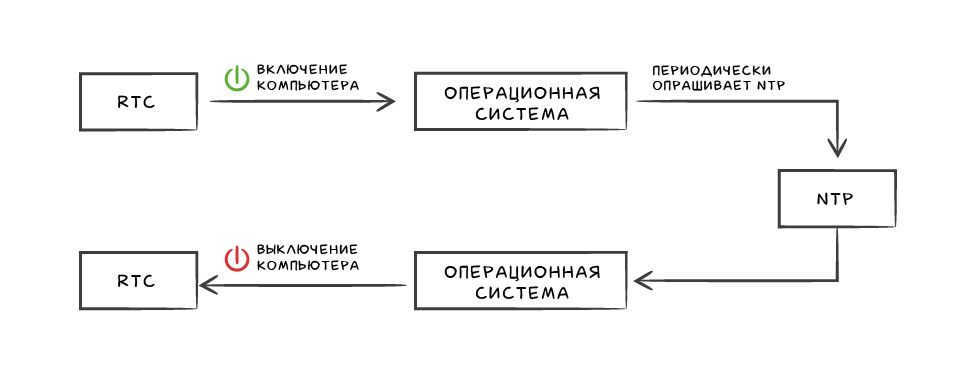

RTC or real-time clock

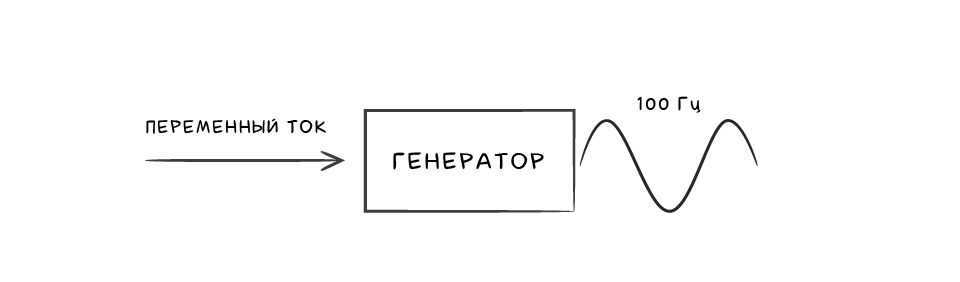

Early personal computers, such as the IBM PC and the Apple II, could not keep track of time while switched off; the time had to be entered at every startup. The quartz oscillator came to the rescue once again. It does not matter to the crystal where its current comes from, so developers connected it to a small lithium battery that keeps it oscillating while the computer is off.

Adding a binary counter that increments with every oscillation turns this circuit into a device that keeps human time: the RTC (Real-Time Clock). A typical RTC oscillates at 32,768 Hz, or 2^15 Hz, which is ideal for binary counters: a 15-bit counter driven at this frequency overflows exactly once per second.
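Why 2^15 Hz is so convenient: a 15-bit binary counter counts from 0 to 32,767 and then wraps back to 0, so when it receives 32,768 pulses per second it overflows exactly once per second, and each overflow can advance the seconds count. The sketch below simulates this in software; real RTC chips do it in hardware, and the function name is illustrative.

```python
RTC_HZ = 32_768      # 2**15 oscillations per second
COUNTER_BITS = 15    # a 15-bit counter wraps around exactly once per second

def count_seconds(pulses: int) -> int:
    """Feed oscillator pulses into a 15-bit binary counter and count its overflows."""
    counter = 0
    seconds = 0
    for _ in range(pulses):
        counter = (counter + 1) & (2**COUNTER_BITS - 1)  # keep only the low 15 bits
        if counter == 0:                                 # wrapped from 32767 back to 0
            seconds += 1
    return seconds

assert RTC_HZ == 2**COUNTER_BITS
print(count_seconds(3 * RTC_HZ))  # 3
```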

The time on the old computer mentioned earlier became inaccurate due to a faulty battery in its real-time clock (RTC). When the computer restarted, it was unable to retrieve the correct time from the RTC, causing it to default to January 1, 1970 as the initial time setting.

Network Time Protocol (NTP)

The RTC (Real-Time Clock) lets a computer keep counting time even while it is powered off. However, a standard RTC drifts by about 1.7 to 8.6 seconds per day, which can add up to nearly an hour over a year.
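Crystal accuracy is usually specified in parts per million (ppm). Assuming that framing (it is ours; the figures above are given only in seconds per day), a crystal that is off by 20 to 100 ppm reproduces the quoted range, as this short sketch shows; the function name is illustrative.

```python
SECONDS_PER_DAY = 86_400
DAYS_PER_YEAR = 365

def drift(ppm: float) -> tuple[float, float]:
    """Worst-case drift (seconds per day, minutes per year) for a crystal off by `ppm`."""
    per_day = ppm * 1e-6 * SECONDS_PER_DAY
    per_year_minutes = per_day * DAYS_PER_YEAR / 60
    return per_day, per_year_minutes

for ppm in (20, 100):
    per_day, per_year = drift(ppm)
    print(f"{ppm:>3} ppm: {per_day:.2f} s/day, {per_year:.1f} min/year")
#  20 ppm: 1.73 s/day, 10.5 min/year
# 100 ppm: 8.64 s/day, 52.6 min/year
```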

We run into this in everyday life whenever we adjust a quartz wristwatch or a microwave oven by hand, since both keep time with similar quartz oscillators. Computers, however, no longer need manual adjustment: they synchronize their clocks over the internet using the Network Time Protocol (NTP). NTP measures how long messages take to travel between the time server and the computer and compensates for that delay. On a public network, the remaining error is typically around 10 milliseconds.
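The compensation rests on four timestamps taken during one request and reply. This minimal sketch uses the standard NTP formulas for clock offset and round-trip delay; it assumes the network delay is the same in both directions, and the timestamps in the example are hypothetical.

```python
def ntp_offset_and_delay(t1: float, t2: float, t3: float, t4: float) -> tuple[float, float]:
    """Clock offset and round-trip delay from one NTP exchange.

    t1: client sends request    (client clock)
    t2: server receives request (server clock)
    t3: server sends reply      (server clock)
    t4: client receives reply   (client clock)
    """
    offset = ((t2 - t1) + (t3 - t4)) / 2   # positive: the client clock is behind the server
    delay = (t4 - t1) - (t3 - t2)          # round trip minus the server's processing time
    return offset, delay

# Hypothetical exchange: the server is 100 ms ahead, each network leg
# takes 10 ms, and the server needs 1 ms to reply.
offset, delay = ntp_offset_and_delay(t1=0.000, t2=0.110, t3=0.111, t4=0.021)
print(f"offset = {offset * 1000:.1f} ms, delay = {delay * 1000:.1f} ms")
# offset = 100.0 ms, delay = 20.0 ms
```

If the two network legs are not equally long, half of the difference shows up as an error in the offset, which is one reason accuracy over the public internet is limited.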

Even when our computer is powered off, the real-time clock (RTC) continues to function and keeps track of time. However, when we turn on the computer and start the operating system, it retrieves the time from the RTC and begins its own time tracking using the CPU timer. Occasionally, the operating system synchronizes its internal clock with the exact time provided by the Network Time Protocol (NTP). Finally, when we shut down the computer, the RTC takes control once again.

Timestamps and epochs

As mentioned above, to keep track of time the operating system maintains a variable (jiffies) in memory that stores the number of ticks since the system started. But how can this be turned into a calendar date that a human can read?

Unix timestamp and epoch

In the early 1970s, engineers at Bell Labs tackled this problem while developing the Unix operating system, which inspired today's Linux and macOS. They added a system variable that counts ticks of the timer from a fixed starting date; that starting date is called the epoch.

The variable was a 32-bit integer; a signed 32-bit value ranges from -2,147,483,648 (-2^31) to 2,147,483,647 (2^31-1). The system timer of the time ticked 60 times per second, so the variable counted sixtieths of a second. At that rate, even all 2^32 possible values of a 32-bit counter cover only about 829 days.

In the 1971 version of Unix, the count started from 1971-01-01 00:00:00; the following year, it started from 1972-01-01 00:00:00. Moving the starting point every year was inconvenient, so in the fourth version of Unix (1973) the epoch was fixed at 1970-01-01 00:00:00, and the variable began counting whole seconds instead of sixtieths of a second. This convention later became a standard and is still in use today.
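The arithmetic behind both choices is easy to check. The sketch below (function name illustrative) computes how long a counter lasts before it runs out of values: all 2^32 values at 60 ticks per second last about 829 days, while counting whole seconds with the positive half of a signed 32-bit integer lasts about 68 years, which, counted from 1970, leads to the year 2038.

```python
SECONDS_PER_DAY = 86_400

def days_until_full(bits: int, ticks_per_second: int) -> float:
    """Days a counter with 2**bits values lasts at the given tick rate."""
    return 2**bits / ticks_per_second / SECONDS_PER_DAY

print(round(days_until_full(32, 60), 1))            # 828.5 days at 60 ticks per second
print(round(days_until_full(31, 1) / 365.25, 1))    # 68.0 years at one tick per second
```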

If your computer runs Linux or macOS, you can check the current Unix timestamp in the terminal with the command `date +%s`.

Windows also counts time from a fixed date, but instead of 1970 it uses January 1, 1601, the start of a 400-year cycle of the Gregorian calendar.
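Both counters are easy to inspect from Python. In this sketch, `time.time()` and `datetime` are standard-library calls, while the two conversion helpers are illustrative. A Windows FILETIME counts 100-nanosecond intervals since January 1, 1601 (UTC), so converting it to Unix time means dividing by 10,000,000 and subtracting the 11,644,473,600 seconds between the two epochs.

```python
import time
from datetime import datetime, timezone

# Seconds between the Windows epoch (1601-01-01) and the Unix epoch (1970-01-01).
EPOCH_DIFFERENCE = int((datetime(1970, 1, 1) - datetime(1601, 1, 1)).total_seconds())
TICKS_PER_SECOND = 10_000_000   # a FILETIME counts 100-nanosecond intervals

def filetime_to_unix(filetime: int) -> int:
    """Convert a Windows FILETIME value to whole Unix seconds."""
    return filetime // TICKS_PER_SECOND - EPOCH_DIFFERENCE

def unix_to_filetime(unix_seconds: int) -> int:
    """Convert whole Unix seconds to a Windows FILETIME value."""
    return (unix_seconds + EPOCH_DIFFERENCE) * TICKS_PER_SECOND

now = int(time.time())                                   # current Unix timestamp
print(now, datetime.fromtimestamp(now, tz=timezone.utc))
print(EPOCH_DIFFERENCE)                                  # 11644473600
print(filetime_to_unix(unix_to_filetime(now)) == now)    # True
```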

The Year 2038 Problem

The problem arises because time is stored in a signed 32-bit integer, which can count only a limited number of seconds from the epoch. The largest value it can hold is 2,147,483,647 (2^31-1). Adding that many seconds to the epoch brings us to January 19, 2038, 03:14:07 UTC. What happens when that moment arrives and one more second passes?

To find the answer to this question, let’s examine a characteristic of 32-bit variables:

The binary representation of 2,147,483,647 is:

01111111111111111111111111111111 // (1 zero and 31 ones).

If we increment this number by one, it will become:

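10000000000000000000000000000000 // (1 one and 31 zeros).

In a signed 32-bit variable, the leading bit is the sign bit, so this pattern means -2,147,483,648. Instead of moving one second forward, the clock jumps back to December 13, 1901, 20:45:52 UTC.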
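The overflow can be reproduced by reinterpreting the 32-bit pattern as a signed integer. This is a minimal sketch: `struct` and `datetime` are Python's standard library, while `as_int32` is an illustrative helper.

```python
import struct
from datetime import datetime, timedelta, timezone

EPOCH = datetime(1970, 1, 1, tzinfo=timezone.utc)
INT32_MAX = 2**31 - 1

def as_int32(value: int) -> int:
    """Reinterpret the low 32 bits of `value` as a signed (two's-complement) integer."""
    return struct.unpack("<i", struct.pack("<I", value & 0xFFFFFFFF))[0]

last_second = as_int32(INT32_MAX)
next_second = as_int32(INT32_MAX + 1)   # one more second: the counter overflows

print(last_second, EPOCH + timedelta(seconds=last_second))
# 2147483647 2038-01-19 03:14:07+00:00
print(next_second, EPOCH + timedelta(seconds=next_second))
# -2147483648 1901-12-13 20:45:52+00:00
```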

The Year 2000 Problem or Y2K Bug

“640 kilobytes of memory should be sufficient for anybody,” a line commonly attributed to Bill Gates, who has denied ever saying it.

In reality, a similar situation has occurred before in history. During the 1960s and 1970s, when people were just beginning to write software for computers, memory boards were costly, and most computers only had a few kilobytes of memory. To conserve memory, programmers opted to write dates in the format DD.MM.YY.

What problem did this create? Suppose the records show a man named Bob with the birth date 01.11.19. He was actually born in 1919 and is now just over 100 years old. Another man, Fred, has the birth date 02.11.19, but he is only two years old, because he was born in 2019. A human can work out the right century from context, but a computer given only this date format cannot.
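Python's `strptime` shows the ambiguity: its `%y` directive maps 69 to 99 onto 1969 to 1999 and 00 to 68 onto 2000 to 2068, so Bob and Fred end up with the same birth year. A common Y2K repair, "windowing", chose a pivot year instead; the `expand_year` helper below is a hypothetical illustration of that idea, and no fixed pivot can recover Bob's 1919.

```python
from datetime import datetime

# Python's %y follows the POSIX rule: 69-99 -> 1969-1999, 00-68 -> 2000-2068.
bob = datetime.strptime("01.11.19", "%d.%m.%y")
fred = datetime.strptime("02.11.19", "%d.%m.%y")
print(bob.year, fred.year)  # 2019 2019 (Bob's real birth year, 1919, is lost)

def expand_year(two_digit_year: int, pivot: int) -> int:
    """Hypothetical windowing rule: years below `pivot` are 20xx, the rest 19xx."""
    return 2000 + two_digit_year if two_digit_year < pivot else 1900 + two_digit_year

print(expand_year(19, pivot=30), expand_year(85, pivot=30))  # 2019 1985
```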

During the 1960s and 1970s, programmers did not anticipate that their code would still be in use in the year 2000. As a result, they commonly used a two-digit year format as a means of optimization. However, as the new millennium approached, panic ensued as many companies were still utilizing this date format. The implications of the year 99 transitioning to 00 were unclear and rumors began to circulate worldwide. For instance, some believed that ATMs would spontaneously dispense money and airplanes would begin to crash. Fortunately, the issue was identified in advance and most systems were able to rectify the problem in a timely manner.

Peculiarities of working with local time and time zones

Reasons to Avoid Manually Coding Time Descriptions

Imagine a hypothetical programmer tasked with creating an application that sends a daily notification to users in London at 12 noon to remind them that it is noon. This programmer resides in London and will only be developing the application for use within the city. Initially, when coding during the winter months, there is no discrepancy between London’s local time and UTC (Coordinated Universal Time).

Surprisingly, the app is gaining popularity. After some time, the developer receives feedback from an irate user in New York: notifications arrive at 7 a.m. instead of noon. New York is in the Eastern Time Zone (ET), which in winter is UTC-05:00.

Setting a single timer based on the server's clock clearly does not work across time zones. To fix this, the developer adds a time zone choice at registration and changes the scheduler to fire a few hours earlier or later for each user, according to the zone selected.

For a while, everything appeared to work. Then, in late March, users in Moscow began complaining that notifications were arriving at 11 a.m. The reason was that the UK had switched from winter to summer time, while Russia had not. Increasingly anxious, the developer added a code exception that exempted Russian users from the clock change. Meanwhile, the app kept gaining traction.

Soon afterwards, at two o'clock in the afternoon, the programmer got a call from Sydney: in the Southern Hemisphere, autumn begins in March, and around this time of year the clocks go back to winter time instead of forward. The programmer had no choice but to add code for this reverse clock change. The app quickly spread around the world, and complaints poured in from everywhere. Users in Palestine, for instance, complained every time their government moved the clocks from summer to winter time, which happened on a different date each year. In 2011, users in Samoa leapt into the future: the country moved across the International Date Line, and December 29, 2011, was followed directly by December 31. A year later, the programmer got a call from the International Earth Rotation and Reference Systems Service (IERS): the application needed adjusting, because that year would be one second longer than usual due to an added leap second. Coding all of these complexities by hand seemed utterly insane, so the programmer shut down the project and vowed never to write code again.

Tips for Effectively Managing Local Time

To avoid encountering similar challenges, developers can take advantage of the knowledge and solutions already available in the public domain. Almost every programming language offers libraries that specialize in handling local time and time zones. Additionally, there are comprehensive databases that store historical and current information about local time.

Here are some recommended approaches for developers:

- Delegate the responsibility of time calculations to reliable libraries and databases.

- Instead of storing only the user's offset from UTC, store their time zone name from the IANA time zone database. For example, store “Europe/Moscow” rather than a numerical offset such as +3.
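Here is a minimal sketch of the second recommendation, assuming Python 3.9+ with the standard `zoneinfo` module and IANA time zone data available on the system (some platforms need the `tzdata` package). Each user's zone is stored by name, and the database decides the UTC offset for every date, daylight saving time included; `noon_in_utc` is an illustrative name.

```python
from datetime import date, datetime, time, timezone
from zoneinfo import ZoneInfo   # Python 3.9+, backed by the IANA time zone database

def noon_in_utc(day: date, tz_name: str) -> datetime:
    """UTC instant at which it is 12:00 local time on `day` in the IANA zone `tz_name`."""
    local_noon = datetime.combine(day, time(12, 0), tzinfo=ZoneInfo(tz_name))
    return local_noon.astimezone(timezone.utc)

users = ["Europe/London", "America/New_York", "Europe/Moscow", "Australia/Sydney"]
for day in (date(2021, 1, 15), date(2021, 7, 15)):
    for tz_name in users:
        print(day, f"{tz_name:<17}", noon_in_utc(day, tz_name).strftime("%H:%M UTC"))
```

On January 15, 2021, noon in New York falls at 17:00 UTC and on July 15 at 16:00 UTC, while Moscow stays at 09:00 UTC all year; none of these rules had to be written by hand.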

A few pointers on how to avoid issues when working with local time

There are numerous scenarios where the order of events is crucial and should not be affected by changes in time zones or daylight saving time.

Let’s say you’re tasked with developing a service that handles bank transactions. The functionality is simple: one user sends money to another user via a bank transfer, and either user can access information about all transfers. In this case, it’s important to store details about the sender, recipient, date and time, and amount of money transferred. Furthermore, this information should be displayed in the user’s local time, while ensuring that transactions in different time zones are properly sequenced.

Let’s learn from the programmer in the story above and use a library instead of implementing time rules by hand. Even then, several questions arise:

- Which time zone should we use to store transactions if our clients reside in different countries and the database is located in a third country?

- How should we handle daylight saving time and winter time adjustments?

- How should we account for the additional second?

- What should we do if a user has relocated from one country to another?

The best approach is to sidestep these questions entirely. For storage, there is no need to consider customers’ time zones, summer and winter time transitions, or user relocations: when you need an absolute point in time, UTC is enough.

Almost all libraries and databases support UTC. We can receive a transaction in the user’s local time, convert it to UTC, store it, and convert it back to the viewer’s local time only for display. Because every stored timestamp uses the same scale, the transactions always sort in chronological order.
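The UTC approach can be sketched in Python. The transfers, their timestamps, and the `to_utc` helper are hypothetical; fixed offsets built with `datetime.timezone` are used only to keep the example free of tz data, whereas a real service would derive them from each user’s IANA zone.

```python
from datetime import datetime, timedelta, timezone

# Hypothetical transfers, each recorded in the sender's local time with its UTC offset.
transfers = [
    ("Masha -> Fedya", datetime(2024, 3, 1, 10, 0, tzinfo=timezone(timedelta(hours=3)))),    # UTC+3
    ("Fedya -> Yura", datetime(2024, 3, 1, 8, 30, tzinfo=timezone(timedelta(hours=1)))),     # UTC+1
    ("Yura -> Michael", datetime(2024, 3, 1, 2, 15, tzinfo=timezone(timedelta(hours=-5)))),  # UTC-5
]

def to_utc(local_time: datetime) -> datetime:
    """Normalize an offset-aware local time to UTC before storing it."""
    return local_time.astimezone(timezone.utc)

# Store (utc_time, label) pairs; sorting by UTC gives the true chronological order.
stored = sorted((to_utc(when), label) for label, when in transfers)
for when, label in stored:
    print(when.isoformat(), label)
```

Sorting by the local clock readings would put Yura’s 02:15 first, but in UTC it falls between the other two transfers. For display, a stored value can be converted back with `astimezone()` into the viewer’s zone.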

However, UTC has one drawback: it includes leap seconds, so we might encounter transactions like these:

- Masha transferred 100 rubles to Fedya on December 31, 2016, at 23:59:58

- Fedya transferred 100 rubles to Yura on December 31, 2016, at 23:59:59

- Yura sent $1 to Michael on December 31, 2016, at 23:59:60

- Michael sent $99 to Ted on January 1, 2017, at 00:00:00
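The problem with the list above can be reproduced in Python, whose `datetime` type, like Unix time, has no slot for 23:59:60. This is a minimal sketch; the `can_represent` helper is hypothetical.

```python
from datetime import datetime, timezone

def can_represent(second: int) -> bool:
    """Return True if datetime accepts this seconds value at 2016-12-31 23:59 UTC."""
    try:
        datetime(2016, 12, 31, 23, 59, second, tzinfo=timezone.utc)
        return True
    except ValueError:  # datetime only allows seconds 0..59
        return False

# Unix-style timestamps of the seconds just before and just after the leap second.
before = datetime(2016, 12, 31, 23, 59, 59, tzinfo=timezone.utc).timestamp()
after = datetime(2017, 1, 1, 0, 0, 0, tzinfo=timezone.utc).timestamp()

print(can_represent(59))  # True
print(can_represent(60))  # False: 23:59:60 is rejected
print(after - before)     # 1.0, although two real seconds elapsed
```

Two real seconds separate the starts of 23:59:59 and 00:00:00, yet the timestamps differ by one, so a transaction stamped 23:59:60 has no distinct value to sort by.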

Many programs that work with UTC cannot represent the time 23:59:60, so they treat it as a repeat of a neighboring second and can no longer tell the correct order of the second, third, and fourth transactions. Google, a company that places great importance on the precise ordering of events, has devised an interesting solution to this problem. Instead of inserting the extra second all at once, its time servers spread it evenly over the 24 hours surrounding the leap second, from noon to noon UTC. This technique is known as a leap smear. So whenever the International Earth Rotation Service announces an additional second, Google’s time servers deliberately run slightly slow for that day, absorbing the extra second without ever showing 23:59:60.
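A linear leap smear comes down to a simple proportion. This is a minimal illustration, not Google’s implementation: it assumes a positive leap second and a 24-hour noon-to-noon UTC window, so 86,401 real seconds are displayed as 86,400 slightly longer smeared seconds. The constant and function names are hypothetical.

```python
# Real (SI) seconds that elapse from noon to noon UTC around a positive leap second.
SMEAR_WINDOW_SI = 86_401
# Seconds the smeared clock displays over that same window.
SMEAR_WINDOW_CLOCK = 86_400

def smeared_elapsed(si_elapsed: float) -> float:
    """Seconds shown by a linearly smeared clock, si_elapsed real seconds into the window."""
    if not 0 <= si_elapsed <= SMEAR_WINDOW_SI:
        raise ValueError("outside the smear window")
    return si_elapsed * SMEAR_WINDOW_CLOCK / SMEAR_WINDOW_SI

def absorbed(si_elapsed: float) -> float:
    """How much of the leap second the smeared clock has absorbed so far."""
    return si_elapsed - smeared_elapsed(si_elapsed)

print(absorbed(43_200.5))  # halfway through the window
print(absorbed(86_401))    # end of the window
```

Halfway through the window the smeared clock has absorbed half a second and at the end exactly one, after which it agrees with UTC again.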

Conclusions

- Every modern PC contains a quartz crystal oscillator that vibrates at regular intervals.

- By counting these oscillations and knowing their frequency, the operating system can measure human time.

- Using the Network Time Protocol (NTP), the operating system can synchronize its clock with time servers.

- When the computer is powered off, a battery-powered chip called the real-time clock (RTC) keeps track of the time.

- An epoch is a reference moment from which time is counted forwards or backwards. Different operating systems and programming languages can choose their own epochs.

- A Unix timestamp is the number of seconds that have passed since the Unix epoch, 00:00:00 UTC on January 1, 1970, not counting leap seconds.

- It is never advisable to write code that directly deals with human time.
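The epoch and Unix timestamp points above can be checked with Python’s standard library. This is a minimal sketch: `time.time()` returns the operating system’s wall-clock time as a Unix timestamp, and the conversions below use UTC explicitly, so they do not depend on the machine’s local time zone.

```python
import time
from datetime import datetime, timezone

# Unix timestamp 0 is the Unix epoch; interpret it explicitly in UTC.
epoch = datetime.fromtimestamp(0, tz=timezone.utc)
# 86,400 seconds (24 * 60 * 60, one day) after the epoch.
one_day_later = datetime.fromtimestamp(86_400, tz=timezone.utc)

print(epoch.isoformat())          # 1970-01-01T00:00:00+00:00
print(one_day_later.isoformat())  # 1970-01-02T00:00:00+00:00

# The current wall-clock time as a Unix timestamp (seconds, as a float).
print(time.time())
```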

Additional Resources

This lesson will discuss various units of time, including the hour, minute, and second. Additionally, we will explore the historical and contemporary tools utilized for measuring time. Furthermore, we will acquire the skills necessary to read an analog clock.


Units of time measurement and determining time with a clock

Hello, boys and girls! Professor Cypherkin here once again. Let’s begin today’s session with a riddle: “What moves faster than thought and has no beginning or end?” Any guesses? It’s time.

Although time itself cannot be seen or touched, it can be measured. Today, we will discuss the various units of time measurement, such as hours, minutes, and seconds. We will also explore the tools that were historically used to measure time and are still used today. Additionally, we will learn the essential skill of reading time from a clock.

Throughout history, people have used many instruments to measure the passing of time. One of the oldest and most enduring is the sundial: a dial across which moves the shadow of the gnomon, a rod that plays the role of the hour hand. As the day goes on, the direction and length of the shadow change.

However, such timepieces were useless on cloudy days and at night, so people set out to invent new devices for measuring time. One answer was the water clock: water dripped slowly from one vessel into another, and the passage of time could be read from how much water had dripped out.

Hey guys, did you know that the very first alarm clock in the world was actually a water clock? It worked by having water flow from the upper vessel to the lower vessel, causing the air to be displaced. This air then traveled through a connected flute, creating a whistling sound. The brilliant mind behind this invention was none other than Plato, an ancient Greek philosopher who lived long before our time.

Next came candle (or fire) clocks: thin candles with a scale marked along their length. Because equal lengths of candle burned down in equal periods of time, the scale showed how much time had passed.

These clocks not only served the purpose of telling time, but also provided illumination during the night. The candles used for this purpose were approximately one meter in length. However, it should be noted that these clocks were not particularly accurate.

Time measurement was not limited to the use of the sun, water, and fire. An alternative method was the hourglass, which consisted of two transparent vessels connected by a narrow hole.

In this device, sand from the upper vessel would slowly pour into the lower vessel over a specific time period. Once all the sand had transferred, the hourglass would be flipped over and the process would begin again.

Eventually, mechanical clocks took the place of hourglasses. These clocks, aptly named for the mechanism inside that moved the hands in a circular motion, were considered a marvel of their time.

However, people did not stop there and kept improving time-measuring instruments. Today, the most common timekeeping devices are electronic clocks and watches, which show the time as digits on a display.

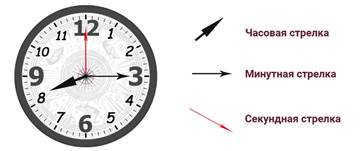

Determining the time is straightforward with such clocks. It is a little harder with a clock that has a dial and hands, so let’s examine one. It has three hands. The hour hand is the shortest and thickest, the minute hand is longer and thinner, and the second hand is the longest, thinnest, and fastest. While the second hand completes a full revolution, the minute hand moves by just one division. This interval is called a minute.

Note that the word “minute” is abbreviated as “min” and the word “second” as a lowercase “s”. No period follows these abbreviations.

The hour hand moves even more slowly. It moves from one numeral to the next in the time the minute hand takes to complete a full revolution. This interval is called an hour.

Keep in mind that the word “hour” is abbreviated as a lowercase “h”, with no period after it.

Let’s observe the clock and attempt to determine the current time.

At this moment, the hour hand, the short thick one, points to the number 2, while the minute hand, the longer thinner one, points to the number 12. This means the clock shows exactly 2 o’clock.

Keep in mind! When the minute hand points straight up, at the number 12, we read the time from the hour hand alone.

A clock where both the hour and minute hands are pointing upwards indicates that it is 12 o’clock.

Now let’s look at the clock face again. It has 60 small marks, with 60 spaces between them. The minute hand crosses one space in one minute. Since there are 60 minutes in an hour, the minute hand passes through all 60 spaces in an hour.

Notice that there are 5 spaces between the marks of any two neighboring numbers on the dial. This means the minute hand takes 5 minutes to move from 12 to 1, another 5 minutes to move from 1 to 2, and so on.
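For readers who program, the dial rule above fits in a tiny Python function. The name `minutes_past_hour` is hypothetical; it takes the numeral the minute hand points at, multiplies it by 5, and treats 12 as zero minutes past the hour.

```python
def minutes_past_hour(numeral: int) -> int:
    """Minutes shown when the minute hand points at a numeral (1-12) on a 12-hour dial."""
    if not 1 <= numeral <= 12:
        raise ValueError("a dial has numerals 1 to 12")
    # Each numeral is 5 one-minute spaces further from 12; at 12 itself, 0 minutes have passed.
    return (numeral * 5) % 60

for n in (12, 1, 3, 4, 6, 9):
    print(n, minutes_past_hour(n))
```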

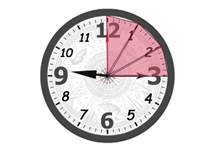

The hour hand has just passed the number 9, and the minute hand is pointing to the number 3. So after 9 o’clock the minute hand has moved away from 12 by 5 minutes, another 5 minutes, and another 5 minutes, for a total of 15 minutes. The clock therefore shows 9 hours and 15 minutes.

Since 9 o’clock has passed and the hour hand is slowly moving towards 10, we can also say that the clock shows 15 minutes past 9, or a quarter past 9.

Let’s determine the exact time shown on this clock.

The hour hand shows that it recently passed 6 o’clock, and the minute hand points to the number 4. Counting how far the minute hand has moved from 12, we get 5, 10, 15, 20: a total of 20 minutes. So the clock shows 6 hours and 20 minutes, or, in other words, 20 minutes past six.

Now, let’s examine this particular clock.

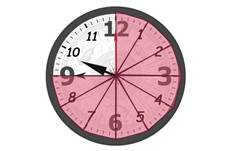

The hour hand shows that it is past 3 o’clock, and the minute hand points to the 6. Counting in steps of 5 from 12, we get 5, 10, 15, 20, 25, 30: a total of 30 minutes. So the clock shows 3 hours and 30 minutes.

Instead of “30 minutes past three,” people usually say something shorter. One hour is 60 minutes, so 30 minutes is half an hour, and we say that the clock shows “half past three.”

In short, 3 o’clock has passed and another half hour has gone by, so our clock shows half past three.

Let’s take a closer look at this particular clock.

The time is shown by the positions of the hour and minute hands. Here the hour hand has passed the 9 and is close to the 10, while the minute hand points to the 9. Counting from 12 in steps of 5 gives 45 minutes, so the time is 9:45.

Counting the minutes this way can be tedious, though. Let’s try another approach: count from 12 in steps of 5, but in the direction opposite to the hands’ movement, until we reach the minute hand. This tells us how many minutes are left until 10 o’clock.

Counting 5, 10, 15, we find that 15 minutes remain until 10 o’clock. So we can also say that the clock shows fifteen minutes to ten, or a quarter to ten.
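The two ways of reading the minute hand, “past” the hour and “to” the next hour, can be combined in a small Python sketch. The function `describe` is hypothetical, and switching to “to” once the minute hand passes the half hour is an assumption of this sketch that matches the examples in this lesson.

```python
def _minutes(n: int) -> str:
    """Spell out a count of minutes with the right singular or plural form."""
    return f"{n} minute" if n == 1 else f"{n} minutes"

def describe(hour: int, minutes: int) -> str:
    """Phrase a 12-hour reading as minutes past the hour or minutes to the next hour."""
    if not (1 <= hour <= 12 and 0 <= minutes <= 59):
        raise ValueError("expects hour 1-12 and minutes 0-59")
    if minutes == 0:
        return f"{hour} o'clock"
    if minutes == 30:
        return f"half past {hour}"
    if minutes < 30:
        return f"{_minutes(minutes)} past {hour}"
    next_hour = hour % 12 + 1  # after 12 comes 1 on a 12-hour dial
    return f"{_minutes(60 - minutes)} to {next_hour}"

print(describe(9, 15))
print(describe(9, 45))
```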

Hey there, folks! Look at this amazing clock. It’s almost twelve o’clock: the clock shows 5 minutes to twelve.

My dear companions, a conventional clock shows only the numbers 1 to 12, yet a whole day has 24 hours. This means the hour hand makes two full revolutions each day, dividing the day into two halves. So if we are told that a bus leaves the stop at 2 o’clock, we need to know whether that is in the first half of the day (2 o’clock in the morning) or in the second half (2 o’clock in the afternoon).

To avoid any confusion, the twelve-hour format is often replaced by the twenty-four-hour format. In this case, the time in the second half of the day is not “1 o’clock in the afternoon” but 13 hours, and not “9 o’clock in the evening” but 21 hours. In the first case, you add 1 to 12 and get 13, and in the second case, you add 9 to 12 and get 21.

Now, how do we convert to the twelve-hour format if we are told that the train arrives at 19 hours? We subtract 12 from 19 and get 7, so the train arrives at 7:00 p.m.
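The conversion rules above can be written as two small Python functions. The names are hypothetical. The lesson’s “add 12” rule covers 1 to 11 p.m.; the sketch also handles the two cases it does not mention: 12 a.m. (midnight) is 0 in the twenty-four-hour format, and 12 p.m. (noon) is 12.

```python
def to_24_hour(hour: int, pm: bool) -> int:
    """Convert a 12-hour clock reading (1-12) plus a.m./p.m. into a 24-hour hour (0-23)."""
    if not 1 <= hour <= 12:
        raise ValueError("12-hour clock hours run from 1 to 12")
    # 1-11 p.m. gain 12; 12 a.m. (midnight) becomes 0 and 12 p.m. (noon) stays 12.
    return hour % 12 + (12 if pm else 0)

def to_12_hour(hour24: int) -> tuple[int, bool]:
    """Convert a 24-hour hour (0-23) into (12-hour reading, is_pm)."""
    if not 0 <= hour24 <= 23:
        raise ValueError("24-hour clock hours run from 0 to 23")
    # Subtract 12 in the afternoon; hour 0 and hour 12 both read as 12 on the dial.
    return (hour24 % 12 or 12, hour24 >= 12)

print(to_24_hour(9, pm=True))  # 9 o'clock in the evening
print(to_12_hour(19))          # the train from the example above
```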

Clocks with an electronic display usually show the time in the twenty-four-hour format, which helps you tell morning from evening without making mistakes.

This device is called a stopwatch, and it measures short intervals of time. Its larger hand counts seconds, and its smaller hand counts fractions of a second.